See here for the Traceroute assignment

The concept of a traceroute was entirely new to me prior to this course. I suppose I knew that when I searched for “espn.com” in my browser, my request would be routed through a number of intermediaries. I didn’t, however, spend much time thinking about the physical and digital entities along those hops, nor did I know that we had the power in our terminals to begin analyzing the physical geography of our own internet behavior via the traceroute command.

I chose to see what a path to “espn.com” looks like from my home device and from NYU. I’ve been going to espn.com since, I think, the website started; I was a religious SportsCenter viewer as a child, and still have a daily habit of checking the sports headlines. If only I had captured traceroutes from 1995-era espn.com up until now -- it would have been even more fascinating to see how things have changed!

NYU -> ESPN

My trace from NYU to ESPN had 22 hops, with 6 of the hops returning ***. The first 13 hops stayed on NYU, NYSERNet, and Internet2 infrastructure, mostly under NYU or .edu domains.

1 10.18.0.2 (10.18.0.2) 2.993 ms 2.020 ms 2.111 ms

2 coregwd-te7-8-vl901-wlangwc-7e12.net.nyu.edu (10.254.8.44) 23.613 ms 11.542 ms 4.040 ms

3 nyugwa-vl902.net.nyu.edu (128.122.1.36) 2.477 ms 2.274 ms 2.618 ms

4 ngfw-palo-vl1500.net.nyu.edu (192.168.184.228) 3.235 ms 2.935 ms 2.744 ms

5 nyugwa-outside-ngfw-vl3080.net.nyu.edu (128.122.254.114) 17.543 ms 2.714 ms 2.862 ms

6 nyunata-vl1000.net.nyu.edu (192.168.184.221) 3.050 ms 3.094 ms 2.833 ms

7 nyugwa-vl1001.net.nyu.edu (192.76.177.202) 3.047 ms 3.383 ms 2.922 ms

8 dmzgwa-ptp-nyugwa-vl3081.net.nyu.edu (128.122.254.109) 3.584 ms 3.769 ms 3.484 ms

9 extgwa-te0-0-0.net.nyu.edu (128.122.254.64) 3.545 ms 3.299 ms 3.292 ms

10 nyc-9208-nyu.nysernet.net (199.109.5.5) 4.031 ms 4.078 ms 3.842 ms

11 i2-newy-nyc-9208.nysernet.net (199.109.5.2) 4.124 ms 3.686 ms 3.505 ms

12 ae-3.4079.rtsw.wash.net.internet2.edu (162.252.70.138) 8.970 ms 9.097 ms 9.792 ms

13 ae-0.4079.rtsw2.ashb.net.internet2.edu (162.252.70.137) 9.741 ms 9.272 ms 9.777 ms

Once the route leaves the academic networks, the first IP address, located in Virginia, is associated with Amazon.com -- presumably one of the AWS servers.

14 99.82.179.34 (99.82.179.34) 13.231 ms 10.645 ms 9.469 ms

15 * * *

The 3 responses at the next hop are also associated with Amazon, this time in Seattle:

16 52.93.40.229 (52.93.40.229) 10.573 ms

52.93.40.233 (52.93.40.233) 9.719 ms

52.93.40.229 (52.93.40.229) 9.397 ms

Then come a number of hops that did not respond, followed by the CDN server at the end of the route.

17 * * *

18 * * *

19 * * *

20 * * *

21 * * *

22 server-13-249-40-8.iad89.r.cloudfront.net (13.249.40.8) 9.340 ms 9.164 ms 9.258 ms
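Counting hops and silent hops by hand gets tedious, so here is a minimal Python sketch of how the tally could be automated. It assumes the usual traceroute layout (a hop number at the start of a line, with continuation lines for extra addresses); the `summarize` function and the `SAMPLE` text are my own illustration, using a few lines copied from the trace above rather than the full output.

```python
import re

# A few lines copied from the NYU trace, one hop per line as
# traceroute normally prints them (not the full 22-hop output).
SAMPLE = """\
14  99.82.179.34 (99.82.179.34)  13.231 ms  10.645 ms  9.469 ms
15  * * *
16  52.93.40.229 (52.93.40.229)  10.573 ms
    52.93.40.233 (52.93.40.233)  9.719 ms
    52.93.40.229 (52.93.40.229)  9.397 ms
17  * * *
22  server-13-249-40-8.iad89.r.cloudfront.net (13.249.40.8)  9.340 ms  9.164 ms  9.258 ms
"""

# A hop line starts with an integer followed by whitespace.
# Continuation lines start with an address such as "52.93.40.233",
# where the digits are followed by a dot, so they do not match.
HOP_START = re.compile(r"^\s*(\d+)\s+(.*)$")


def summarize(trace_text):
    """Return (hop_count, silent_hop_numbers) for traceroute-style text."""
    hops = {}       # hop number -> all text seen for that hop
    current = None  # hop number that continuation lines belong to
    for line in trace_text.splitlines():
        if not line.strip():
            continue  # tolerate blank lines between hops
        match = HOP_START.match(line)
        if match:
            current = int(match.group(1))
            hops[current] = match.group(2)
        elif current is not None:
            hops[current] += " " + line.strip()
    # A hop is "silent" when every probe came back as "*".
    silent = [n for n, body in hops.items() if set(body.split()) == {"*"}]
    return len(hops), silent


hop_count, silent_hops = summarize(SAMPLE)
print(f"{hop_count} hops parsed, silent hops: {silent_hops}")
```

Feeding it the complete saved output of a trace (for example, redirected to a text file) should give the totals for the whole route instead of this excerpt.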

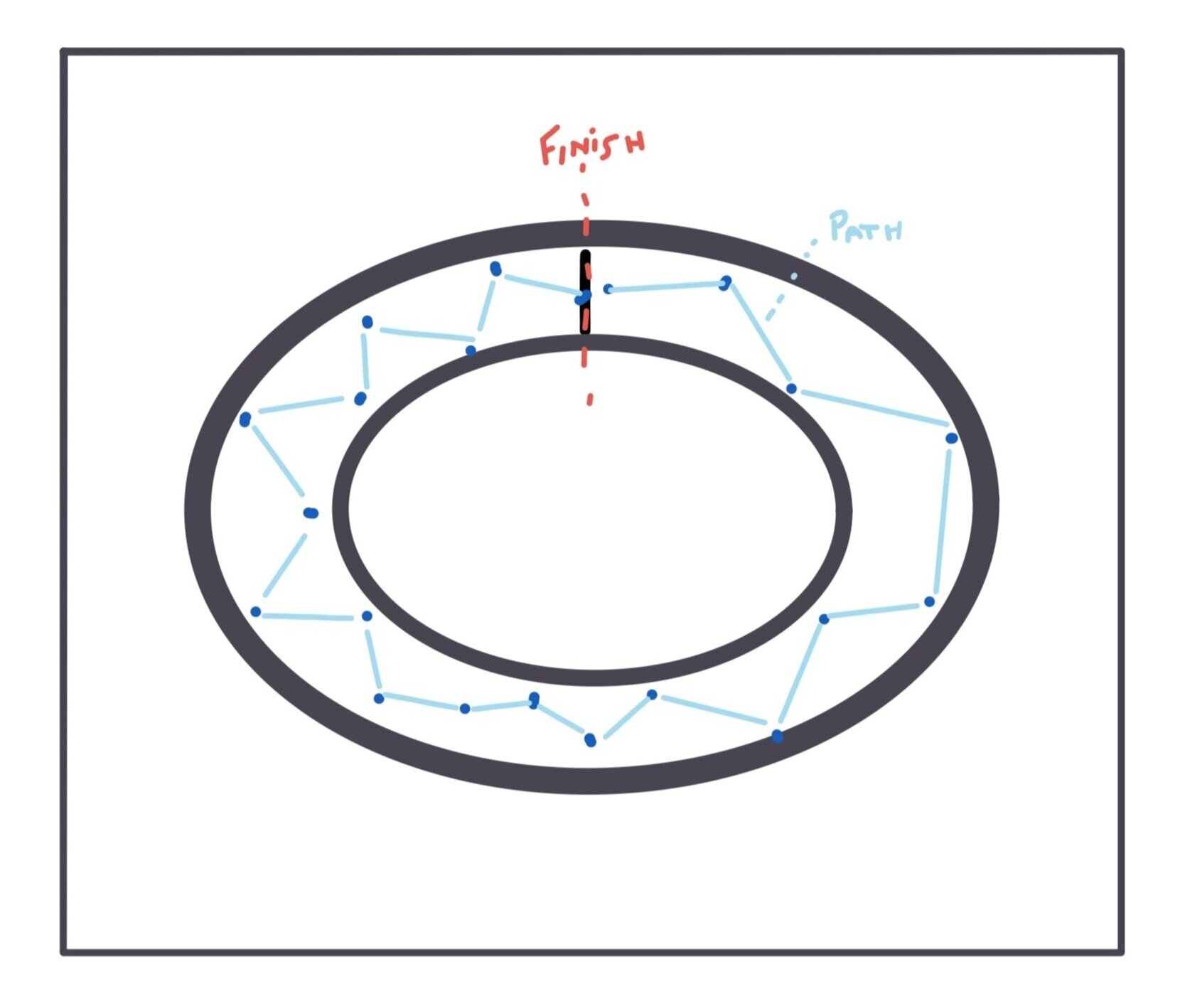

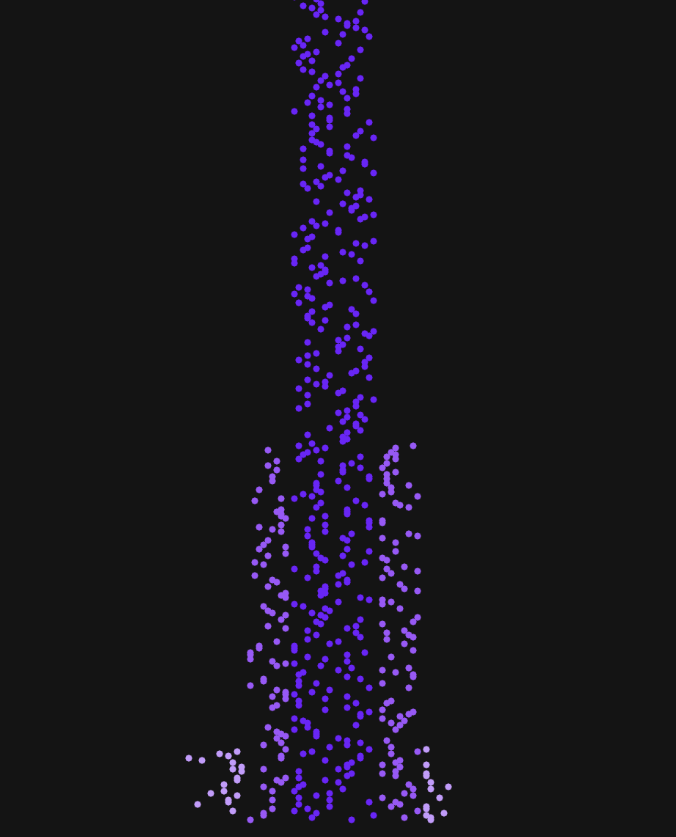

I used the traceroute-mapper tool to visualize these hops, but it looks like their data doesn’t quite match the data I found with https://www.ip2location.com/demo; there is no Seattle in sight on the traceroute-mapper rendering! Who should we believe here?
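One way to sanity-check a geolocation claim is with the timings themselves: a reply cannot come back faster than light can make the round trip. The Python sketch below is a rough back-of-the-envelope check, not a definitive test. The city coordinates and the figure of about 200 km per millisecond for light in fiber are my own assumptions, and real cable routes are longer than the great-circle distance, so the true floor would be even higher.

```python
import math

# Assumption: light in optical fiber covers roughly 200 km per millisecond
# (about two thirds of its speed in a vacuum).
FIBER_KM_PER_MS = 200.0

# Assumed approximate city-center coordinates (latitude, longitude) in degrees.
NYC = (40.7128, -74.0060)
SEATTLE = (47.6062, -122.3321)


def great_circle_km(lat1, lon1, lat2, lon2):
    """Haversine distance in km between two points given in degrees."""
    earth_radius_km = 6371.0
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    d_phi = phi2 - phi1
    d_lambda = math.radians(lon2 - lon1)
    a = (math.sin(d_phi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(d_lambda / 2) ** 2)
    return 2 * earth_radius_km * math.asin(math.sqrt(a))


def min_rtt_ms(distance_km):
    """Lower bound on round-trip time over fiber for a one-way distance."""
    return 2 * distance_km / FIBER_KM_PER_MS


distance = great_circle_km(*NYC, *SEATTLE)
floor = min_rtt_ms(distance)
# Fastest of the three hop-16 timings from the NYU trace.
measured = min(10.573, 9.719, 9.397)

print(f"NYC to Seattle: about {distance:.0f} km")
print(f"Round-trip floor over fiber: about {floor:.1f} ms")
print(f"Fastest measured reply at hop 16: {measured} ms")
print("Plausibly in Seattle?", measured >= floor)
```

Under these assumptions the hop-16 replies arrive well below the floor for a New York to Seattle round trip, which suggests the traceroute-mapper rendering may be closer to the truth here. My guess is that the Seattle result reflects where Amazon registered the address block rather than where the router physically sits.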

Home -> ESPN

I live in Manhattan, so I am not very physically far from the ITP floor. However, my home network and the NYU network would be expected to have pretty different hoops to jump through, so to speak, to get to the end destination. That, in fact, proved true with the traceroute.

This route had 29 hops, with 12 of them returning ***. The first hop is my home router; the first public IP address, at hop 2, is owned by Honest Networks, my internet provider. This is my IP address.

1 router.lan (192.168.88.1) 5.092 ms 2.481 ms 2.140 ms

2 38.105.253.97 (38.105.253.97) 4.323 ms 2.554 ms 3.187 ms

The next 3 hops all have private (10.x.x.x) IP addresses -- I’m guessing these are also associated with my local device.

3 10.0.64.83 (10.0.64.83) 2.247 ms 2.302 ms 2.181 ms

4 10.0.64.10 (10.0.64.10) 3.127 ms 3.857 ms 3.588 ms

5 10.0.64.0 (10.0.64.0) 3.653 ms

10.0.64.2 (10.0.64.2) 2.214 ms 3.188 ms
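Whether an address falls in a private range can be checked with Python's standard `ipaddress` module instead of by eye. This is a small sketch using the addresses from the first six hops above; the `classify` helper is my own naming. Note that it only says whether an address is in a reserved private range, not who operates the router. Since these 10.x hops come after a public address, they may well sit inside the provider's network rather than on my own equipment.

```python
import ipaddress

# Addresses from hops 1-6 of the home trace.
HOPS = [
    "192.168.88.1",   # hop 1, router.lan
    "38.105.253.97",  # hop 2
    "10.0.64.83",     # hop 3
    "10.0.64.10",     # hop 4
    "10.0.64.0",      # hop 5
    "10.0.64.2",      # hop 5 (second address)
    "38.30.24.81",    # hop 6
]


def classify(addr):
    """Label an address 'private' or 'public' using the stdlib's range tables."""
    return "private" if ipaddress.ip_address(addr).is_private else "public"


labels = {addr: classify(addr) for addr in HOPS}
for addr, label in labels.items():
    print(f"{addr:<15} {label}")
```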

The next IP address is associated with PSINet in Washington, DC, which appears to be a tier-1 optical provider. My guess is that Honest piggybacks off of this larger network, a legacy company now owned by Cogent.

6 38.30.24.81 (38.30.24.81) 2.673 ms 2.573 ms 2.061 ms

Then we see a whole lot of Cogent-owned (cogentco.com) hostnames, followed by two IP addresses in hop 10 that are again associated with PSINet.

7 te0-3-0-31.rcr24.jfk01.atlas.cogentco.com (154.24.14.253) 2.053 ms 2.771 ms

te0-3-0-31.rcr23.jfk01.atlas.cogentco.com (154.24.2.213) 2.712 ms

8 be2897.ccr42.jfk02.atlas.cogentco.com (154.54.84.213) 2.846 ms

be2896.ccr41.jfk02.atlas.cogentco.com (154.54.84.201) 2.280 ms 3.010 ms

9 be2271.rcr21.ewr01.atlas.cogentco.com (154.54.83.166) 3.106 ms

be3495.ccr31.jfk10.atlas.cogentco.com (66.28.4.182) 3.281 ms

be3496.ccr31.jfk10.atlas.cogentco.com (154.54.0.142) 3.611 ms

10 38.140.107.42 (38.140.107.42) 3.374 ms 3.468 ms

38.142.212.10 (38.142.212.10) 3.910 ms

We’ve finally reached the AWS IP addresses, which account for the remainder of the hops, both in Virginia and Seattle. Given how many hops there are and how prevalent AWS is for so many hosted sites, it’s hard to draw conclusions about what companies/services these hops might correspond to. But I suppose it’s another indication of just how dominant AWS is.

11 52.93.59.90 (52.93.59.90) 6.790 ms

52.93.59.26 (52.93.59.26) 4.047 ms

52.93.31.59 (52.93.31.59) 3.876 ms

12 52.93.4.8 (52.93.4.8) 3.708 ms

52.93.59.115 (52.93.59.115) 11.582 ms 6.948 ms

13 * * 150.222.241.31 (150.222.241.31) 3.954 ms

14 * * 52.93.128.175 (52.93.128.175) 3.131 ms

15 * * *

16 * * *

17 * * *

18 * * *

19 * * *

20 * * *

21 * * *

22 * * *

23 * * 150.222.137.1 (150.222.137.1) 3.128 ms

24 150.222.137.7 (150.222.137.7) 2.671 ms

150.222.137.1 (150.222.137.1) 3.558 ms

150.222.137.7 (150.222.137.7) 2.685 ms

25 * * *

26 * * *

27 * * *

28 * * *

29 * server-13-225-229-96.jfk51.r.cloudfront.net (13.225.229.96) 3.178 ms 3.992 ms

Conclusion

It really would be fascinating to see how the geographical path of a website visit has changed over the 25 years since I began using the internet. A cursory search for tools or research that looked into this wasn’t fruitful, although I’m sure I could look harder and find something related. However, if you know of something along these lines, I’d love to see it!

My own analysis of my visits to espn.com, while not leading to any major revelations, put the idea of physical networks front and center in my mind. In combination with our virtual field trip, I feel like I have a much more tangible understanding of the physical infrastructure of the web than I did just a few weeks ago.